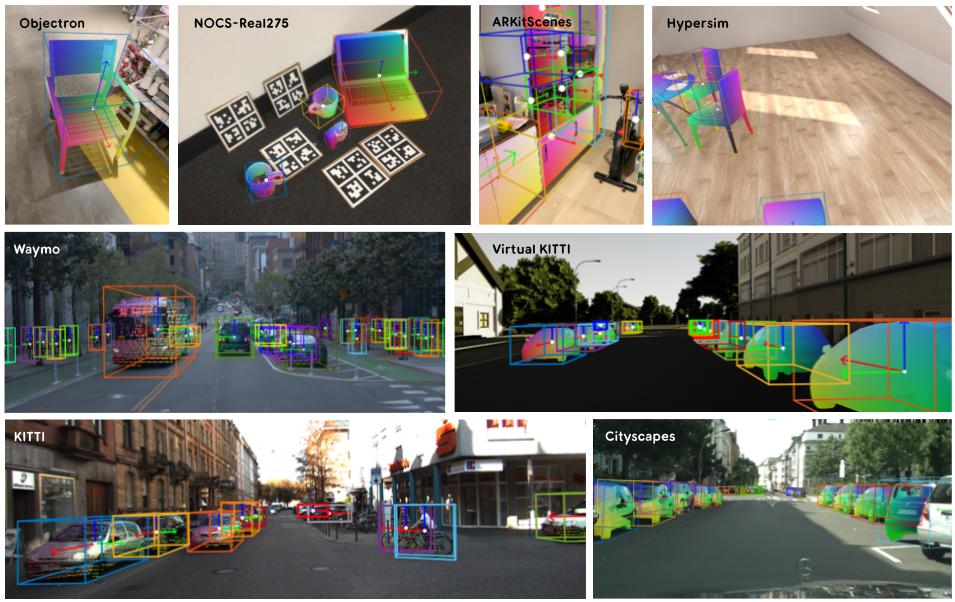

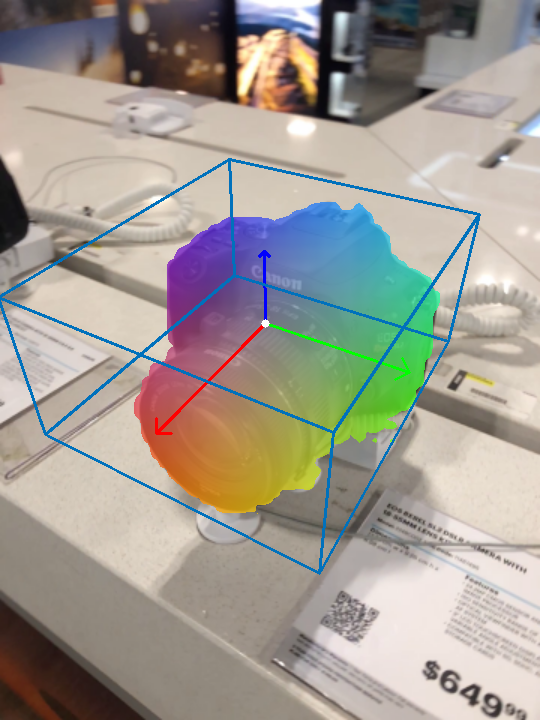

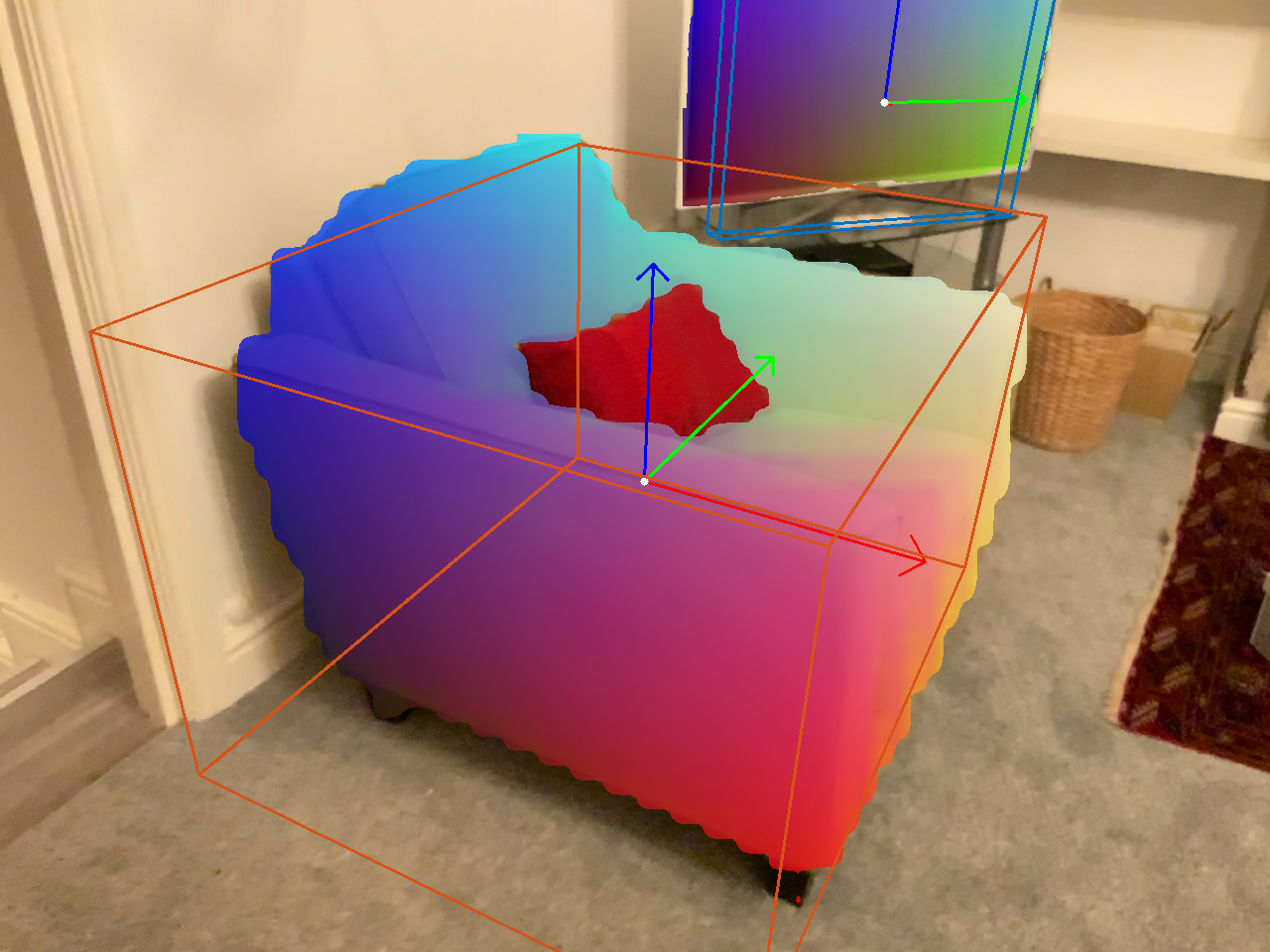

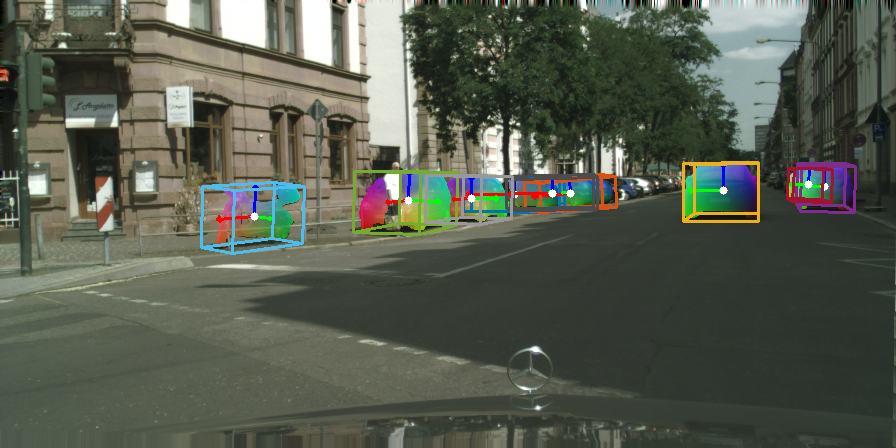

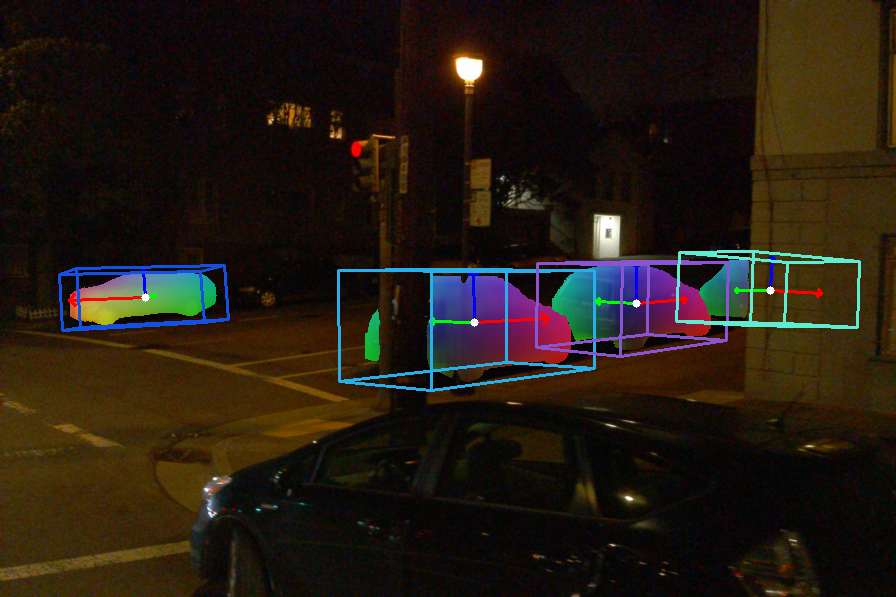

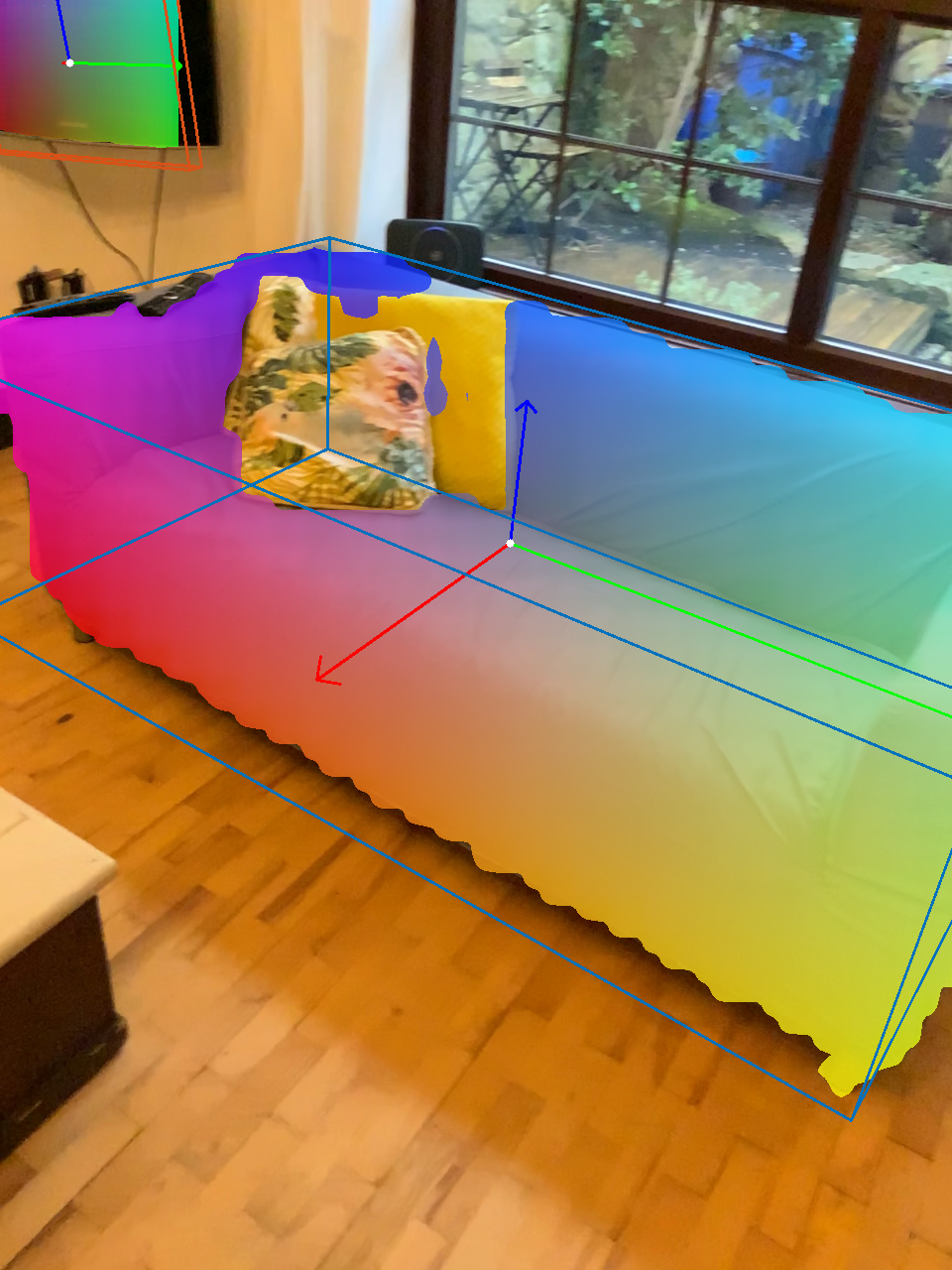

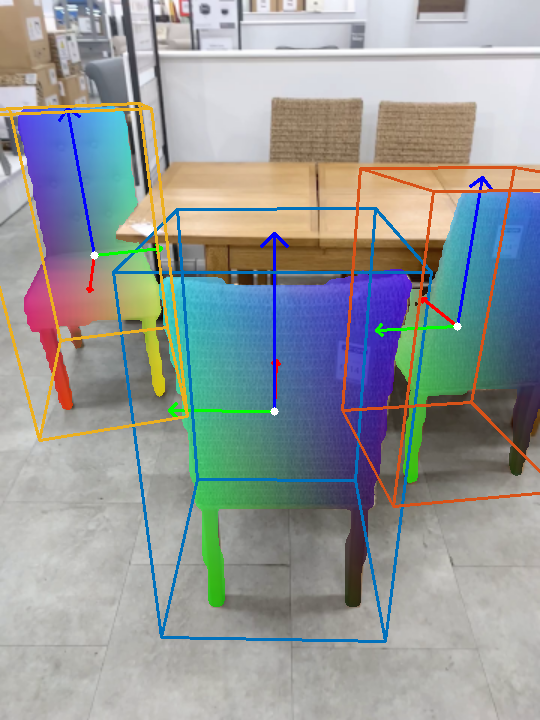

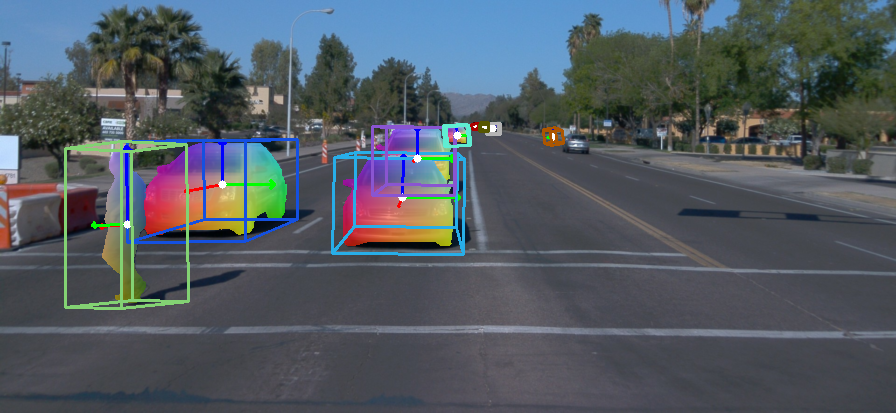

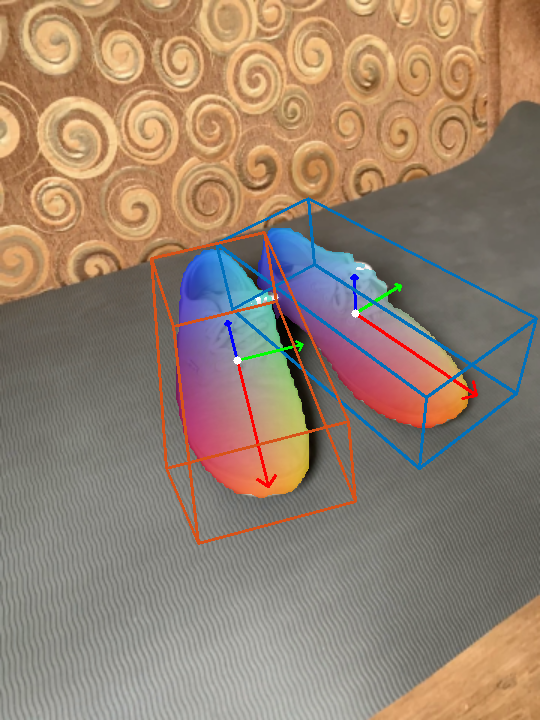

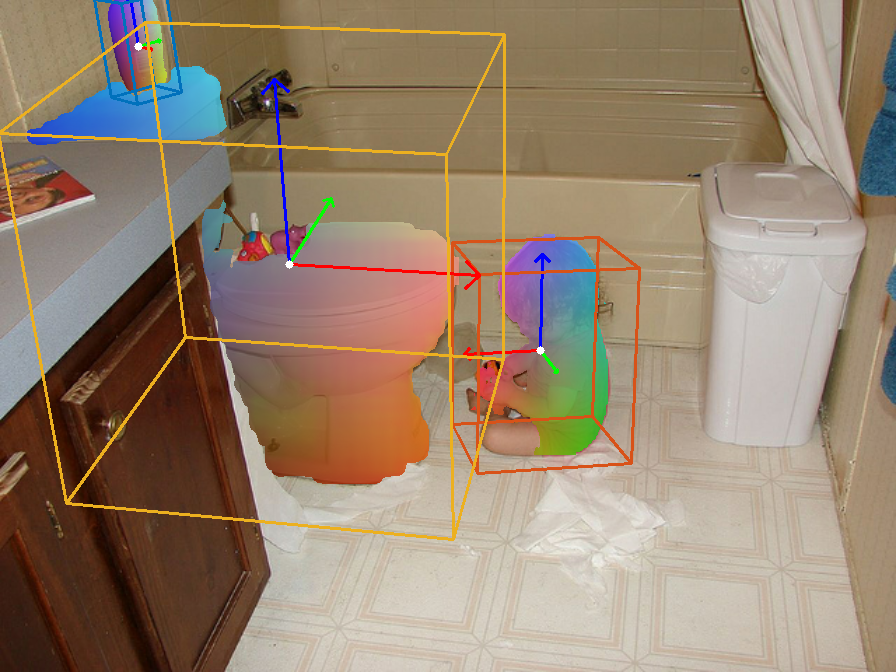

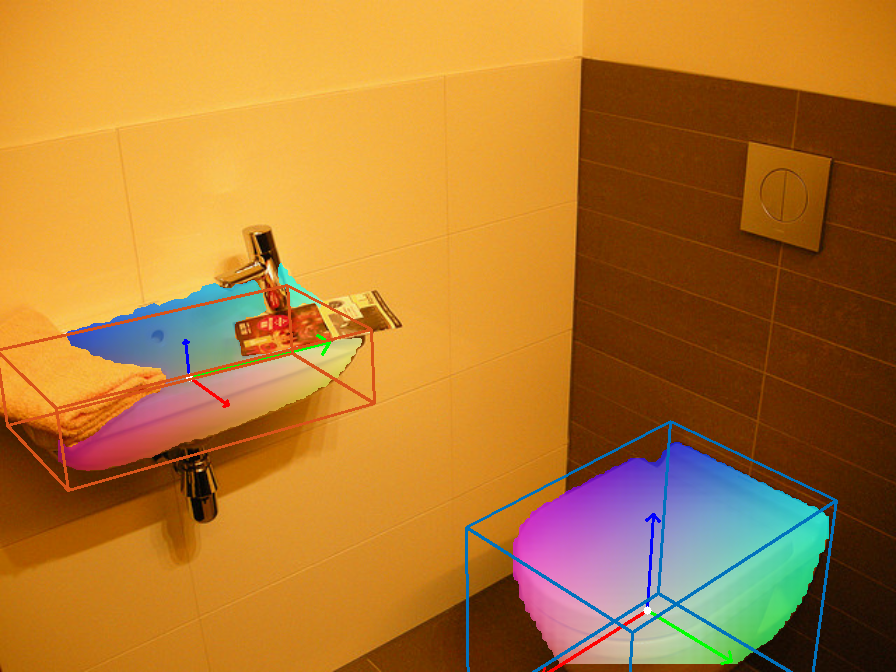

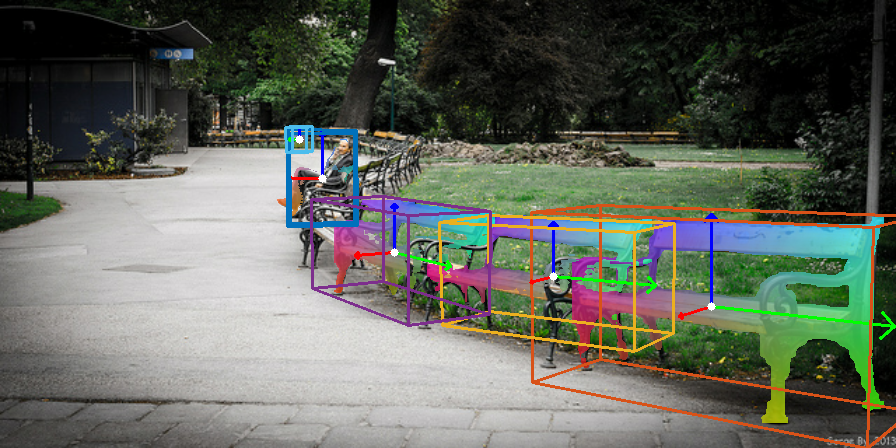

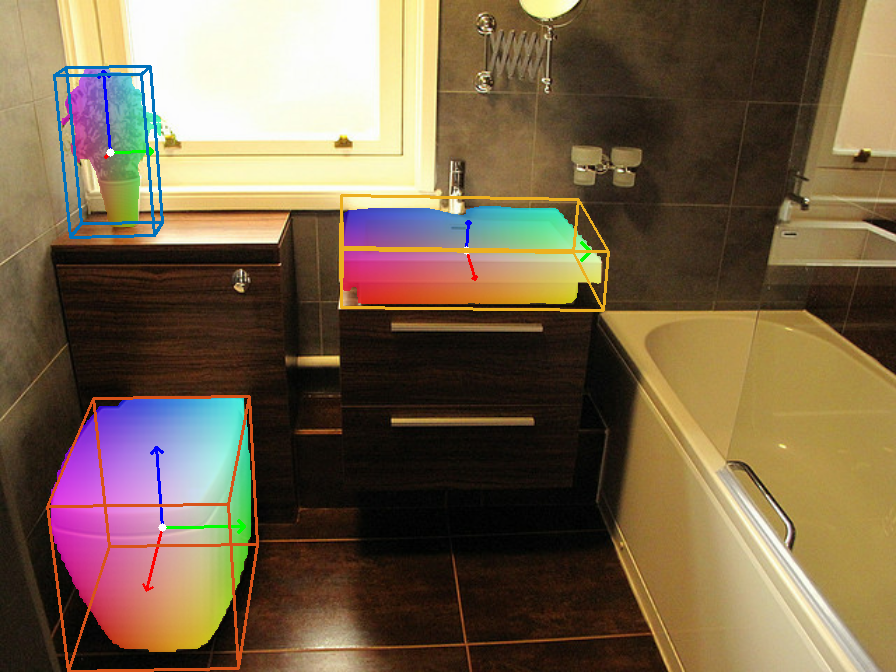

We propose OmniNOCS, a large-scale monocular dataset with 3D Normalized Object Coordinate Space (NOCS) maps, object masks, and 3D bounding box annotations for indoor and outdoor scenes. OmniNOCS has 20 times more object classes and 200 times more instances than existing NOCS datasets (NOCS-Real275, Wild6D).

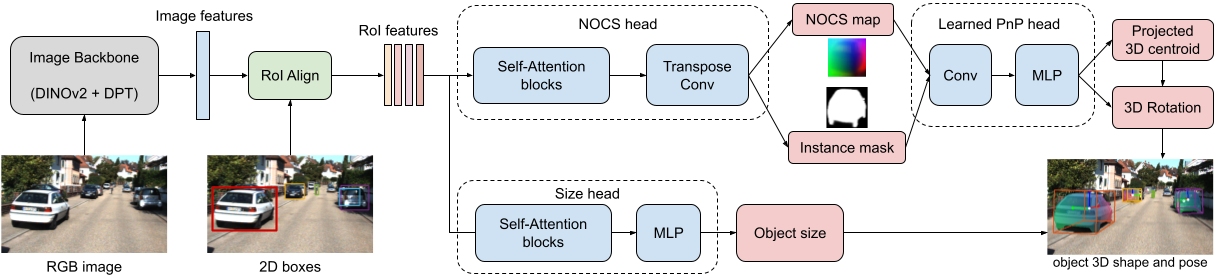

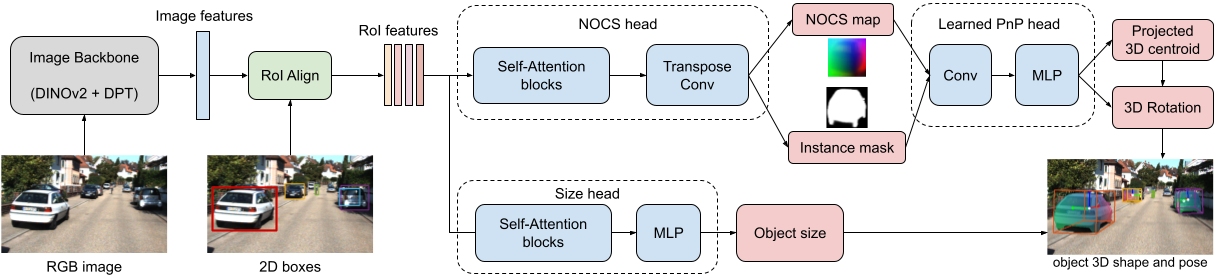

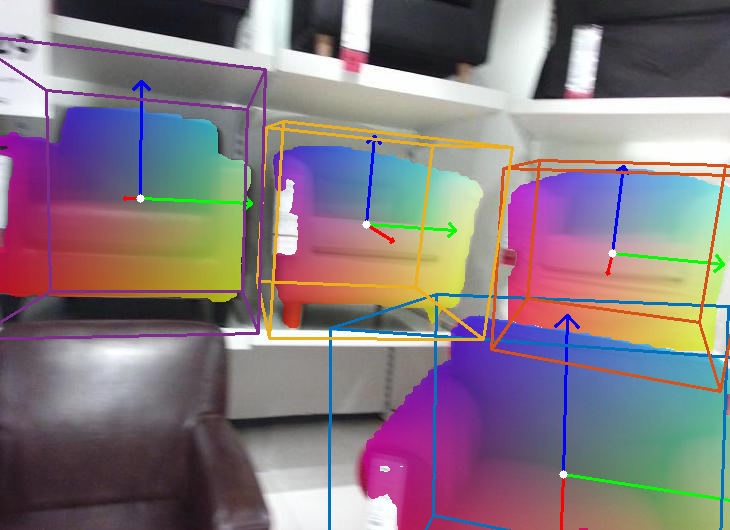

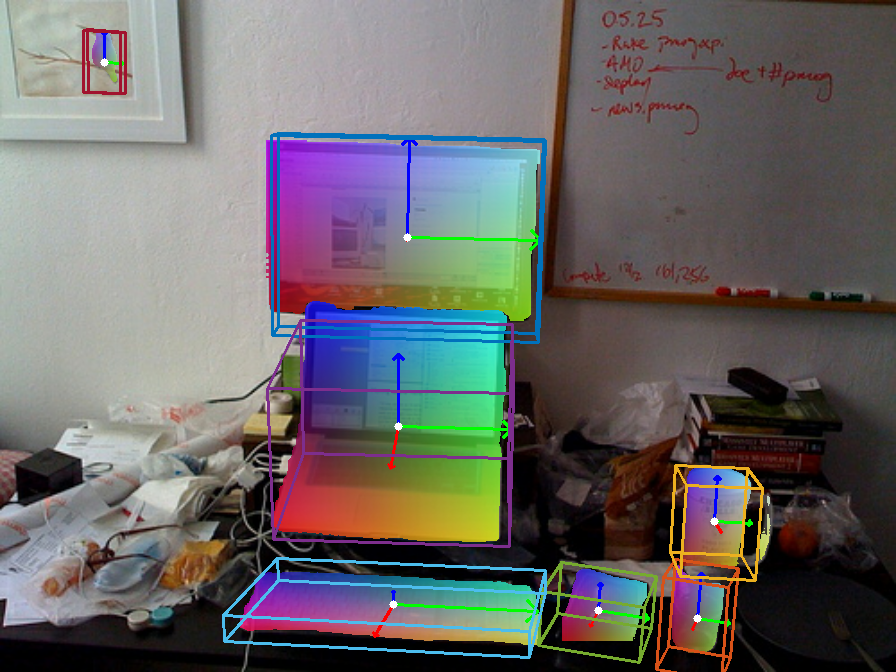

We use OmniNOCS to train a novel, transformer-based monocular NOCS prediction model, "NOCSformer", that predicts accurate NOCS, instance masks, and poses from 2D object detections across diverse classes. It is the first NOCS model that can generalize to a broad range of classes when prompted with 2D boxes. We evaluate our model on the task of 3D oriented bounding box prediction, where it achieves results comparable to state-of-the-art 3D detection methods such as Cube R-CNN. Unlike other 3D detection methods, our model also provides detailed and accurate 3D object shape and segmentation. We propose a novel benchmark for the task of NOCS prediction based on OmniNOCS, which we hope will serve as a useful baseline for future work in this area.
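To make the link between NOCS values, object pose, and 3D box size concrete, here is a minimal sketch of the mapping between camera-frame points and NOCS coordinates. It is not the NOCSformer implementation. The function names are hypothetical, and the normalization (dividing by the box diagonal and centering at 0.5) is assumed from the convention of the original NOCS paper; OmniNOCS may normalize differently.

```python
import numpy as np


def camera_points_to_nocs(points_cam, rotation, translation, box_size):
    """Map camera-frame points on an object to NOCS coordinates.

    Assumed convention (hypothetical helper, not the paper's code):
      rotation    : (3, 3) object-to-camera rotation matrix
      translation : (3,) box center in the camera frame
      box_size    : (3,) box extents along the object axes
    """
    points_cam = np.asarray(points_cam, dtype=float)
    # Undo the pose. For row vectors, R^T (p - t) is written (p - t) @ R.
    points_obj = (points_cam - translation) @ rotation
    # Scale uniformly so the box diagonal has length 1, then center at 0.5.
    diagonal = np.linalg.norm(box_size)
    return points_obj / diagonal + 0.5


def nocs_to_camera_points(nocs, rotation, translation, box_size):
    """Inverse mapping: lift NOCS coordinates back into the camera frame."""
    nocs = np.asarray(nocs, dtype=float)
    diagonal = np.linalg.norm(box_size)
    points_obj = (nocs - 0.5) * diagonal
    return points_obj @ rotation.T + translation


# Example pose: 90 degree rotation about z, object 5 units in front of the camera.
rotation = np.array([[0.0, -1.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 0.0, 1.0]])
translation = np.array([0.0, 0.0, 5.0])
box_size = np.array([2.0, 1.0, 1.0])

# The box center and one box corner, expressed in the camera frame.
corner_obj = box_size / 2.0
points_cam = np.stack([translation, corner_obj @ rotation.T + translation])

nocs = camera_points_to_nocs(points_cam, rotation, translation, box_size)
recovered = nocs_to_camera_points(nocs, rotation, translation, box_size)
```

Under this convention the box center maps to (0.5, 0.5, 0.5) and every box corner lies at distance 0.5 from it, so the whole object fits inside the unit cube regardless of its metric size.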

*Omni3D: A Large Benchmark and Model for 3D Object Detection in the Wild* introduced a large-scale cross-domain 3D object detection dataset that inspired our work.

*Normalized Object Coordinate Space for Category-Level 6D Object Pose and Size Estimation* introduced the concept of NOCS and its applications in 6D pose estimation.